Learn what AI auditing is, why it’s critical for accountability and compliance, and how organizations use it to manage AI risk and build trustworthy systems.

"Over half of leading IT and data analytics respondents say their organization has an AI governance framework implemented, either as a dedicated framework (12%) or as an extension of other governance frameworks (34%)," according to Gartner's AI Governance Frameworks for Responsible AI report.

This statistic highlights a growing reality: as AI becomes a cornerstone of decision-making in industries like finance and healthcare, whether it’s determining credit scores or diagnosing illnesses, concerns about transparency, fairness, and accountability are rising just as quickly.

That’s why AI auditing isn’t just a buzzword; it’s a critical tool to ensure AI systems are ethical, compliant, and trustworthy. Think of it as a safeguard that keeps innovation on the right track while building confidence in the technology we rely on every day.

In this guide, we’ll break down everything you need to know about AI auditing. From what it is and why it matters to the different types of audits and best practices for implementation, we’ve got you covered with practical insights to help you navigate this essential process with confidence. Let’s dive in!

AI auditing is all about ensuring AI systems meet ethical, legal, and operational standards—and it's a key step toward building trustworthy, responsible technology. It’s more than just checking if an AI works; it’s about looking deeper. Are the data inputs high-quality and unbiased? Are the outcomes fair? Does the decision-making process make sense and follow the rules?

“Ethical AI begins with responsible innovators and AI governance,” says Reggie Townsend, vice president of the SAS Data Ethics Practice.

Here’s what a typical AI audit focuses on:

By auditing AI systems, we ensure they’re not just functional but also trustworthy and aligned with the values that matter most. It’s a powerful way to build confidence in AI while empowering innovation.

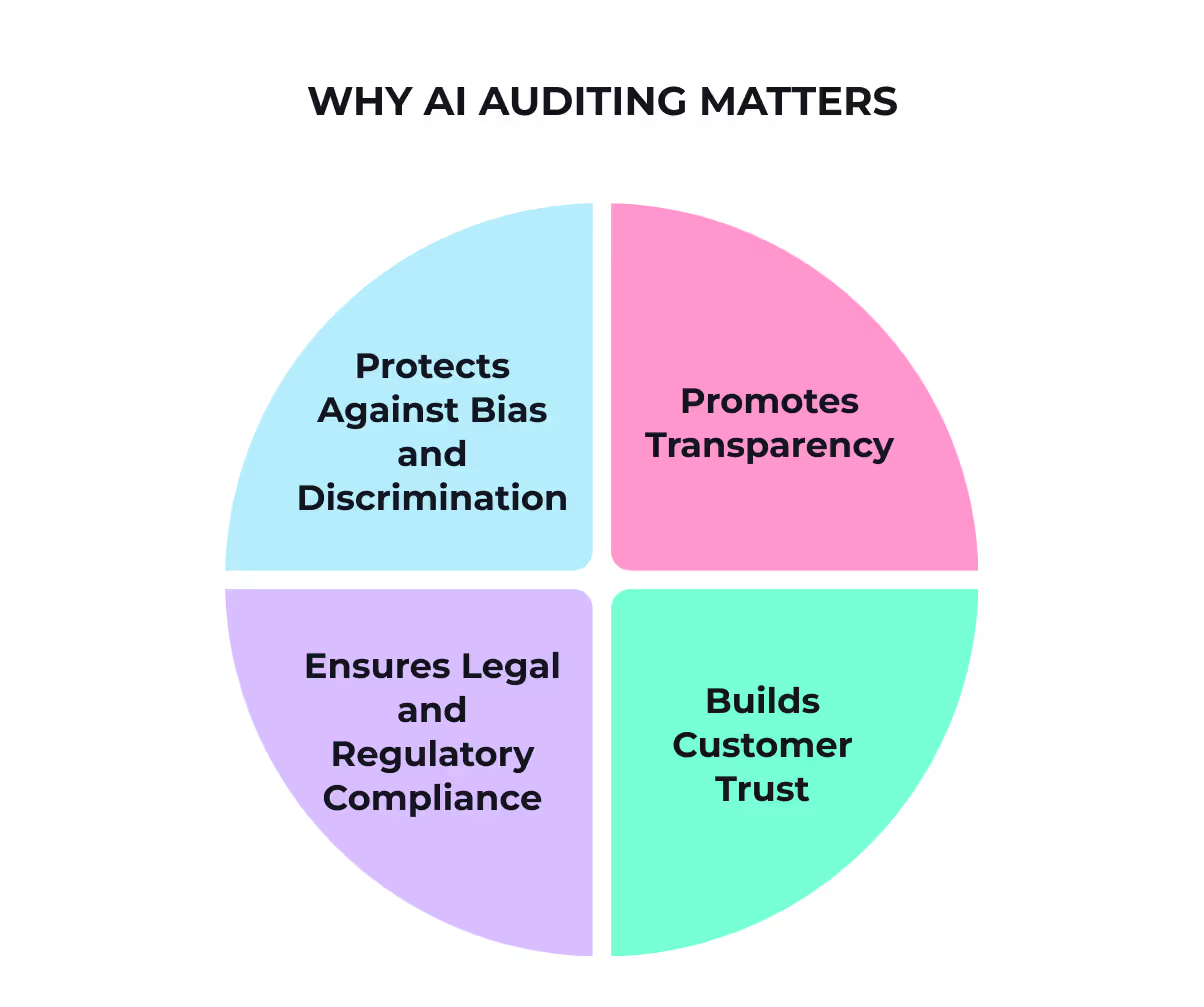

AI auditing isn’t just about ticking compliance boxes—it’s a powerful tool to reduce risks, improve transparency, and build trust. Below are a few reasons why it’s so important for any business:

AI systems can unintentionally reflect or even amplify societal biases. For example, some hiring algorithms have favored male candidates over equally qualified female candidates because of biased training data. Regular audits help identify and address these issues, supporting fairness and reducing the risk of discrimination.
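To make the bias check concrete, here is a minimal Python sketch of one common audit measurement: comparing selection rates across groups. The sample data, the group labels, and the 0.8 review threshold (borrowed from the "four-fifths" rule of thumb used in US employment guidance) are illustrative assumptions, not part of any specific audit standard described in this guide.

```python
from collections import Counter


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model recommended the candidate.
    """
    totals = Counter()
    positives = Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening outcomes: (group, was_selected)
sample = (
    [("male", True)] * 60 + [("male", False)] * 40
    + [("female", True)] * 30 + [("female", False)] * 70
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)

# Assumed review threshold of 0.8; a real audit would set this with legal input.
needs_review = ratio < 0.8
print(rates, ratio, needs_review)
```

A low ratio does not prove discrimination on its own, but it tells the audit team where to look more closely.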

Auditing makes AI decisions traceable and understandable, which is essential in industries like healthcare or finance, where decisions can have a big impact. With clear and explainable processes, businesses can build confidence in their AI systems.

Governments around the world are stepping up AI regulations, and staying prepared is more important than ever. Here’s what you need to know:

By understanding these shifts, you can confidently navigate the evolving AI landscape and turn compliance into an opportunity for innovation.

Customers notice when businesses take responsibility. According to the Aziri AI Risk Report, more than 280 Fortune 500 companies cited AI as a risk in their financial reports in 2024 (Aziri AI, 2024). AI failures in 2023 often stemmed from a lack of oversight and testing. A strong auditing process shows accountability, reassuring customers and stakeholders that your AI works ethically and effectively.

By embracing AI audits, you’re not just meeting regulations—you’re setting a standard for innovation, integrity, and trust that inspires confidence in both your team and your customers.

AI auditing is a powerful way to ensure your systems are performing at their best while staying ethical, legal, and reliable. It involves four key types of reviews, each designed to evaluate different aspects of your AI. Here’s a clear breakdown:

These focus on the foundation of your AI—the algorithm itself. Key areas include:

This goes beyond functionality, looking at how the system aligns with societal values and fairness.

Staying compliant is crucial in today’s data-driven world. This audit ensures you’re meeting key regulations, such as:

Effective AI systems rely on solid internal processes as their foundation. This audit examines:

By understanding and applying these audits, you can build AI systems that are not only effective but also trustworthy and impactful.

A robust AI audit examines multiple critical dimensions to ensure compliance, fairness, and overall performance integrity. Each step plays a vital role in building trust, reducing risks, and creating reliable AI systems.

Auditors evaluate whether the data used to train the AI is balanced, representative of the intended population, and ethically sourced. They also check for potential issues like missing data, skewed distributions, or hidden biases, as these are common causes of AI failures. High-quality data lays the foundation for trustworthy AI systems.
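As a rough illustration, two of these data checks (missing values and group representation) can be sketched in a few lines of Python. The field names, sample rows, expected population shares, and the 10-percentage-point tolerance below are all hypothetical and would need to come from your own data and audit policy.

```python
from collections import Counter


def missing_rate(rows, field):
    """Fraction of rows where `field` is absent or None."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(field) is None)
    return missing / len(rows)


def representation_gaps(rows, field, expected_shares, tolerance=0.10):
    """Compare observed group shares with expected population shares.

    Returns {group: (observed, expected)} for every group whose observed
    share differs from its expected share by more than `tolerance`.
    """
    present = [row[field] for row in rows if row.get(field) is not None]
    counts = Counter(present)
    total = len(present)
    gaps = {}
    for group, expected in expected_shares.items():
        observed = counts[group] / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = (observed, expected)
    return gaps


# Hypothetical training rows
training_rows = (
    [{"age_band": "18-35", "income": 40000}] * 70
    + [{"age_band": "36-60", "income": None}] * 20
    + [{"age_band": "60+", "income": 30000}] * 10
)

# Assumed shares of each age band in the population the model will serve
expected = {"18-35": 0.40, "36-60": 0.45, "60+": 0.15}

income_missing = missing_rate(training_rows, "income")
gaps = representation_gaps(training_rows, "age_band", expected)
print(income_missing, gaps)
```

In this made-up dataset, a fifth of the income values are missing and two age bands are far from their expected shares, which is the kind of finding an auditor would flag before looking at the model itself.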

Is the AI model predictable, consistent, and transparent in its decision-making? Auditors analyze how the algorithm behaves under different scenarios, including less common or extreme cases, to ensure it produces rational and coherent outcomes. Special attention is given to identifying anomalies, unintended behaviors, or weak spots that could undermine the model's reliability in real-world applications.
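One simple way to probe consistency is to nudge an input slightly and see whether the decision flips. The sketch below assumes a stand-in scoring rule (`hypothetical_credit_model`) and a 1% perturbation, both invented for illustration; a real audit would run the same idea against the production model and its actual features.

```python
def hypothetical_credit_model(income, debt):
    """Stand-in scoring rule used only for illustration."""
    if income <= 0:
        return "decline"
    return "approve" if debt / income < 0.4 else "decline"


def stability_report(model, cases, relative_change=0.01):
    """List the cases whose decision flips when income shifts slightly.

    Each case is an (income, debt) pair. Income is nudged up and down by
    `relative_change`, and the case is reported if either nudged decision
    differs from the baseline decision.
    """
    unstable = []
    for income, debt in cases:
        baseline = model(income, debt)
        nudged = [
            model(income * (1 + relative_change), debt),
            model(income * (1 - relative_change), debt),
        ]
        if any(decision != baseline for decision in nudged):
            unstable.append((income, debt, baseline))
    return unstable


cases = [
    (50_000, 10_000),  # comfortably below the debt-to-income threshold
    (50_000, 19_900),  # just below the threshold
    (0, 5_000),        # edge case: zero income
    (1_000_000, 0),    # edge case: very high income, no debt
]

unstable = stability_report(hypothetical_credit_model, cases)
print(unstable)
```

Cases that flip under tiny changes are not necessarily wrong, but they mark the regions where the model's behavior deserves a closer manual review.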

What processes and safeguards are in place to handle risks like bias, discrimination, or false positives? Auditors look for proactive measures, such as fairness checks, testing for edge cases, and systems designed to adapt to unexpected inputs. This step ensures the AI remains resilient, adaptable, and capable of minimizing harm, even in complex or unpredictable situations.

Are you maintaining thorough records, including audit logs, model cards, and decision-making frameworks? Transparent and well-organized documentation is crucial not only for compliance with regulations but also for building trust with stakeholders and regulators. Documentation provides a clear record of how decisions are made, how risks are managed, and how the system evolves over time, ensuring accountability at every stage.
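For a sense of what an audit log entry might contain, here is a minimal Python sketch that records one automated decision as a JSON line. The field names, the model name and version, and the choice to store a hash of the inputs rather than the inputs themselves are assumptions made for illustration, not a required or standard schema.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_record(model_name, model_version, inputs, decision, explanation):
    """Build one audit-log entry for a single automated decision."""
    # Sort keys so the same inputs always produce the same hash.
    canonical_inputs = json.dumps(inputs, sort_keys=True)
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_name": model_name,
        "model_version": model_version,
        # Store a hash so the entry can be matched to the original inputs
        # later without copying them into the log.
        "input_sha256": hashlib.sha256(canonical_inputs.encode("utf-8")).hexdigest(),
        "decision": decision,
        "explanation": explanation,
    }


def append_record(path, record):
    """Append the record to a JSON Lines file, one entry per line."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record, sort_keys=True) + "\n")


# Hypothetical decision being logged
record = build_audit_record(
    model_name="loan-screening",
    model_version="1.4.0",
    inputs={"income": 50000, "debt": 19900},
    decision="approve",
    explanation="debt-to-income ratio below 0.4",
)
print(json.dumps(record, indent=2))
```

Calling `append_record("audit_log.jsonl", record)` would add the entry to a log file. An append-only, one-line-per-decision format keeps the history easy to review and hard to rewrite quietly.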

By addressing these dimensions with care and precision, an AI audit ensures that your systems are not only effective but also ethical, transparent, and future-proof.

Auditing AI systems can feel complex, but the right mix of internal and external resources makes it manageable—and effective.

“The risks posed by AI systems are becoming increasingly significant as AI adoption accelerates across industry and society,” said Peter Slattery, a researcher at MIT FutureTech.

By combining these resources, you can create a strong, transparent approach to AI auditing—one that supports trust, accountability, and long-term success.

AI is revolutionizing industries, and with that comes the growing need for thorough audits to ensure these systems are accurate, fair, and trustworthy. According to Alex Singla, McKinsey partner and co-leader, “20 percent or less of gen-AI-produced content is checked before use.” Let’s look at some key industries where AI auditing makes an impact:

This shows just how essential auditing has become to build trust and confidence in AI-powered systems.

AI Auditing and Regulation

Regulatory bodies are stepping up to ensure AI is used responsibly—and that’s great news for everyone. Here’s what’s happening:

Proactive audits aren’t just about avoiding fines or reputational risks—they’re about fostering innovation in a way that’s safe, ethical, and future-ready. With the right approach, businesses can stay ahead of regulations while building trust and driving progress. Let’s embrace these changes as an opportunity to lead with confidence.

Organizations can tackle AI compliance with confidence by leveraging the right tools and frameworks:

Want to simplify AI compliance? Start here:

Unchecked AI systems can harm more than they help—think bias, privacy breaches, and lost trust. But auditing isn’t just about avoiding risks; it’s about unlocking the full potential of AI while building trust in the process.

Embedding audits into every phase of the AI lifecycle empowers organizations to stay compliant, improve efficiency, and adapt to evolving regulations. Remember, proactive beats reactive.

Q: What is an AI audit?

An AI audit is a comprehensive review of an organization’s artificial intelligence systems and processes. It involves evaluating the design, development, implementation, and ongoing use of AI to identify potential risks and ensure compliance with ethical standards and regulations.

Q: Why is it important to conduct an AI audit?

Conducting an AI audit allows organizations to proactively identify potential risks and take steps to address them before they become major issues. It also helps build trust in the use of AI and demonstrates a commitment to ethical practices. In addition, many industries are now subject to regulations regarding the use of AI, making audits necessary for compliance purposes.

Q: What steps can organizations take to promote ethical and responsible AI use?

Organizations can ensure ethical and responsible AI practices by implementing clear guidelines for the development, deployment, and use of AI systems. This includes conducting regular audits to identify potential biases or risks in the system, as well as involving diverse stakeholders in the decision-making process to minimize any unintentional harm. It is also important for organizations to continuously monitor and update their AI systems to adapt to changing circumstances and mitigate potential issues.

Moreover, organizations should prioritize transparency in their AI processes, providing clear explanations on how decisions are made and being open about any limitations or biases within the system. Additionally, regularly engaging with experts in AI ethics and seeking feedback from impacted communities can help organizations identify and address any ethical concerns that may arise.